Sponsored by IBM

Are you a z/OS system programmer, capacity planner, or mainframe professional looking to simplify the optimization of your workload management (WLM) batch workloads and reduce the skills it requires? If so, read on to learn how AI-powered WLM can help you and early-tenure mainframe professionals manage workload spikes, reduce their impact on system performance, and meet SLAs.

Imagine it’s Monday at 9:00 a.m. and a spike of 50 batch jobs arrives. The jobs cannot be processed immediately, because the WLM initiators that execute them must be started first (see Figure 1). WLM ramps up initiators reactively, in 10-second intervals, until enough are available, which can lead to long wait times and performance issues.

Figure 1 — WLM managed initiators, reactive approach

With AI-powered WLM batch initiator management, the system uses AI to predict recurring batch workload spikes and reacts proactively by allocating the right resources in advance. This eliminates the overhead of manual fine-tuning and trial-and-error approaches.

How Does AI-powered WLM Work?

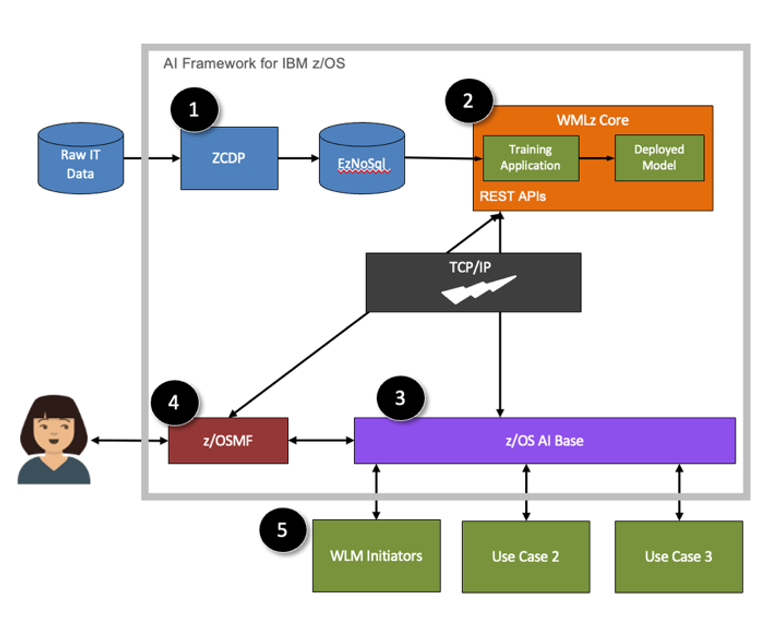

AI-powered WLM is available in IBM z/OS 3.1, and you configure it so that the AI learns from your company’s IT data and workload patterns. Using System Management Facilities (SMF) type 99 subtype 2 data, you trigger the training of a pre-built large language model (LLM) delivered with the AI Framework for IBM z/OS (see Figure 2, step 1). You start the training from the z/OS AI Control Interface.

Figure 2 — Predicting upcoming workloads to optimize required resources

Once trained, an AI model familiar with your work patterns is deployed automatically. As real-time data is ingested automatically (see Figure 2, step 2), WLM sends inference requests to the deployed model to predict upcoming workloads (step 3). In step 4, the system forwards the predictive insight to WLM, which automatically starts or stops initiators to match the prediction and assigns resources proactively.
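To make step 4 more concrete, here is a minimal sketch of how a predicted job count could be turned into a start/stop decision. It is not WLM’s actual algorithm: the `plan_initiators` function, the one-initiator-per-predicted-job rule, and the `max_initiators` ceiling are all hypothetical assumptions for illustration.

```python
from typing import NamedTuple


class InitiatorPlan(NamedTuple):
    action: str  # "start", "stop", or "none"
    count: int   # how many initiators to start or stop


def plan_initiators(current: int, predicted_jobs: int, max_initiators: int) -> InitiatorPlan:
    """Turn a workload prediction into a start/stop decision (hypothetical).

    Assumes one initiator per predicted job and a site-defined upper limit
    on initiators; neither rule is taken from IBM documentation.
    """
    # Clamp the target between 0 and the assumed site limit.
    target = min(max(predicted_jobs, 0), max_initiators)
    if target > current:
        return InitiatorPlan("start", target - current)
    if target < current:
        return InitiatorPlan("stop", current - target)
    return InitiatorPlan("none", 0)


print(plan_initiators(current=5, predicted_jobs=50, max_initiators=40))
# InitiatorPlan(action='start', count=35)
```

The point of the sketch is that the decision happens before the jobs arrive, so the initiators are already running when the spike hits.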

When a similar batch spike arrives the following Monday at the same time (see Figure 3), the necessary number of WLM initiators is made available earlier than it would be without AI-powered WLM, resources are assigned with the correct priority, and the workload is processed immediately.

Figure 3 — WLM managed initiators with a proactive approach
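The difference between the reactive and proactive approaches can be approximated with simple arithmetic. The sketch below is a simplified model, not a description of WLM internals: it assumes each initiator runs one job, that no job finishes during the ramp-up, and that a fixed, hypothetical number of initiators (`added_per_interval`) is added at each 10-second interval. In this model, the proactive case is the same ramp-up with a head start equal to the predicted number of initiators.

```python
import math

RAMP_INTERVAL_S = 10  # initiator ramp-up interval described in the article


def wait_until_all_started(jobs: int, initiators_ready: int, added_per_interval: int) -> int:
    """Return seconds until every queued job has an initiator.

    Hypothetical model: one job per initiator, no job finishes during the
    ramp-up, and a fixed number of initiators is added per interval.
    """
    if added_per_interval <= 0:
        raise ValueError("added_per_interval must be positive")
    missing = max(0, jobs - initiators_ready)
    return math.ceil(missing / added_per_interval) * RAMP_INTERVAL_S


# Reactive: only a few initiators are running when the 50 jobs arrive.
reactive = wait_until_all_started(jobs=50, initiators_ready=5, added_per_interval=5)
# Proactive: initiators were started ahead of time based on a prediction.
proactive = wait_until_all_started(jobs=50, initiators_ready=50, added_per_interval=5)
print(f"reactive: {reactive}s, proactive: {proactive}s")  # reactive: 90s, proactive: 0s
```

If the prediction undershoots (for example, 45 initiators ready for 50 jobs), the model falls back to reactive ramp-up for the remainder, here one extra 10-second interval.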

Do Users Need AI/Data Science Skills?

No AI or data science skills are required to use these AI capabilities in z/OS. Your team keeps full control of the AI through simulation mode and can switch between modes without losing batch jobs or missing the chance to execute any of them during the transition.

Simulation mode stops the AI processing at step 3 (see Figure 2), so you gain predictive insights without any automated action. Enable mode continues to step 4, forwarding the predictive insight to WLM, which then adjusts the initiators. In simulation mode, you can use SMF 99 records to compare predicted and actual batch jobs, build trust in the predictions, and decide whether to enable the intelligent operations.
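The following sketch illustrates the difference between the modes and one way to compare predicted with actual batch jobs. Everything here is hypothetical: the `Mode` names (the third mode is assumed to be a "disabled" state), the `run_interval` function, and the use of mean absolute error as a comparison metric are illustrative choices, and the actual counts would in practice come from your SMF 99 records rather than being hard-coded.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    DISABLED = "disabled"      # assumed name: no inference at all
    SIMULATION = "simulation"  # stop after step 3: predict, take no action
    ENABLED = "enabled"        # continue to step 4: WLM adjusts initiators


@dataclass
class PredictionLog:
    """Hypothetical record of predicted vs. actual batch jobs per interval."""
    predicted: list[int] = field(default_factory=list)
    actual: list[int] = field(default_factory=list)

    def mean_absolute_error(self) -> float:
        pairs = list(zip(self.predicted, self.actual))
        if not pairs:
            return 0.0
        return sum(abs(p - a) for p, a in pairs) / len(pairs)


def run_interval(mode: Mode, predicted: int, current_initiators: int, log: PredictionLog) -> int:
    """Return the initiator count after one prediction interval."""
    if mode is Mode.DISABLED:
        return current_initiators
    log.predicted.append(predicted)  # steps 2-3 run in simulation and enabled mode
    if mode is Mode.ENABLED:
        return predicted  # step 4: start or stop initiators to match the prediction
    return current_initiators  # simulation: insight only, no automated action


log = PredictionLog()
initiators = 5
for predicted in [48, 10, 0]:
    initiators = run_interval(Mode.SIMULATION, predicted, initiators, log)
# Later, actual job counts are taken from SMF 99 records (hard-coded here).
log.actual.extend([50, 12, 1])
print(initiators, f"{log.mean_absolute_error():.2f}")  # 5 1.67
```

In simulation mode the initiator count is untouched while the prediction history accumulates, which is what lets you judge accuracy before switching to enable mode.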

A Look Under the Hood

Figure 4 — z/OS AI Framework architectural components and data flow

To support AI-powered WLM, IBM z/OS 3.1 offers the z/OS AI Framework, which makes the AI model operational. The framework acts as a machine learning operations (MLOps) platform for infusing AI into IBM z/OS use cases. Its components are illustrated in Figure 4.

1. Z Common Data Provider (ZCDP) filters SMF 99 subtype 2 records and transfers them into EzNoSQL for z/OS, where they are held until a system programmer opts to train the model. EzNoSQL is a component of DFSMS that serves as the data store for AI model training data. ZCDP also transfers real-time data for prediction.

2. Machine Learning for IBM z/OS Core Edition (MLz Core), a subset of MLz Enterprise Edition, hosts the WLM training application. It automatically deploys the trained model and executes the inference requests coming from WLM to predict upcoming workloads.

3. AI Base Component for IBM z/OS (z/OS AI Base) handles the communication between WLM and MLz Core. Every 10 seconds, it intercepts the WLM inference request, forwards it to MLz Core, receives the inference result, and returns it to WLM.

4. AI Control Interface for IBM z/OS (z/OS AI Control Interface) is a z/OSMF plug-in used to trigger training and retraining and to switch between the three AI modes.

5. Beyond AI-powered WLM, the framework is designed to pave the way for future AI use cases.

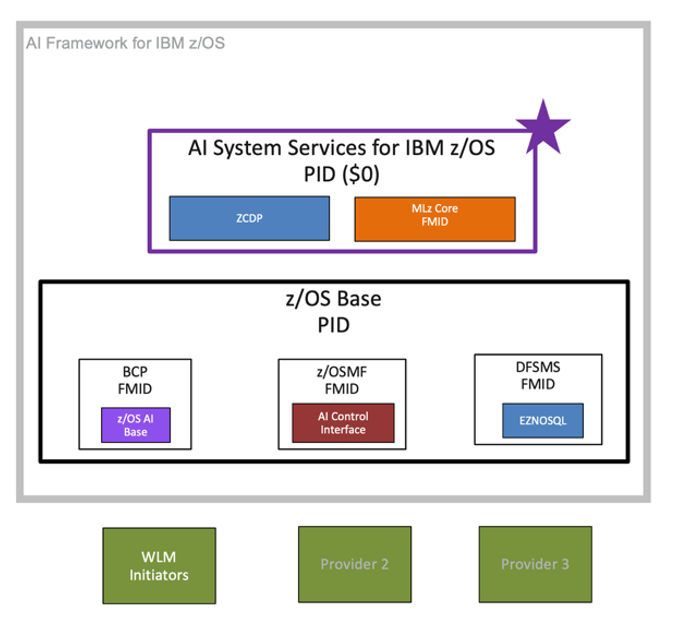

How Is the z/OS AI Framework Delivered?

Figure 5 — Delivery of AI Framework components

IBM z/OS 3.1 includes three z/OS AI Framework components: EzNoSQL, z/OS AI Base, and z/OS AI Control Interface (see Figure 5). The remaining components, ZCDP and MLz Core, are delivered with AI System Services for IBM z/OS, a bundled offering available at no additional fee that supports AI infusion into z/OS base components only.

AI System Services for IBM z/OS includes a subset of the z/OS AI Framework capabilities to support AI infused into z/OS. It supports key AI lifecycle phases, including data ingestion, AI model training, inference, AI model quality monitoring, and retraining. It integrates with the other z/OS AI Framework components to build an AI platform that supports initial and future intelligent z/OS management capabilities.

3 Steps for Getting Started

1. Install Prerequisites

The z/OS AI Framework requires an IBM z14 or newer processor, the z/OS 3.1 base product, and AI System Services for IBM z/OS (PID 5655-164). EzNoSQL, which is based on VSAM Record Level Sharing (RLS), requires an internal or external coupling facility. For more information, see the hardware/software requirements and tuning guidelines.

2. Collect Data

Start collecting SMF 99 subtype 2 data now: the pre-built training application requires 30 days of contiguous data to train the AI model.
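Because the training application needs 30 contiguous days, a gap in collection restarts the clock. The article does not describe how the training application validates this, so the sketch below is only a hypothetical pre-check you might run against your own inventory of collection dates; the `collected` set and its one-day gap are made-up illustration data.

```python
from datetime import date, timedelta


def longest_contiguous_run(days: set[date]) -> int:
    """Length, in days, of the longest run of consecutive dates in `days`."""
    best = 0
    for d in days:
        if d - timedelta(days=1) in days:
            continue  # not the first day of a run
        length = 1
        while d + timedelta(days=length) in days:
            length += 1
        best = max(best, length)
    return best


REQUIRED_DAYS = 30  # contiguous SMF 99 subtype 2 history needed for training

# Hypothetical collection history: 35 days with a one-day gap on day 10.
start = date(2024, 1, 1)
collected = {start + timedelta(days=i) for i in range(35) if i != 10}
run = longest_contiguous_run(collected)
print(run, run >= REQUIRED_DAYS)  # 24 False
```

Even though 34 days of data exist in this example, the single missing day leaves only a 24-day contiguous run, which would not meet the 30-day requirement.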

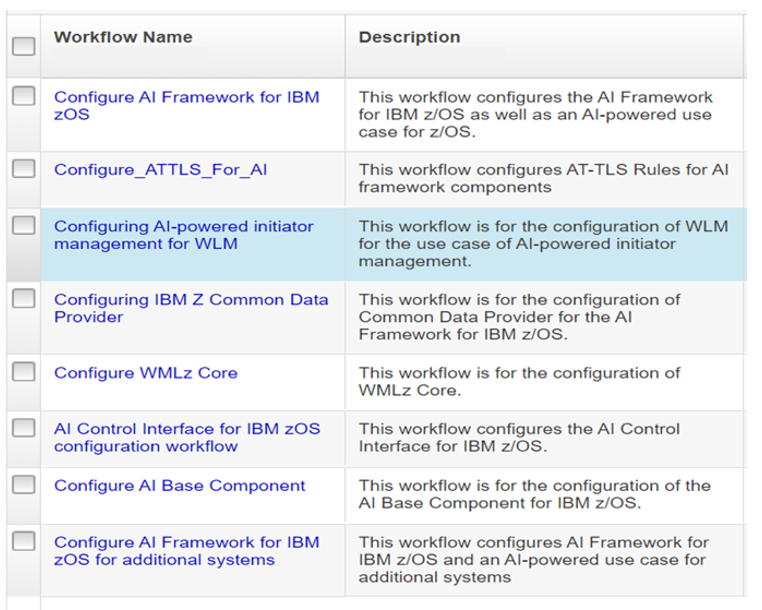

3. Configure

The z/OS AI Framework configuration workflow provides your infrastructure team with step-by-step guidance to set up the different components and AT-TLS security rules (see Figure 6). Optionally, add another system to your z/OS AI Framework using the “Configure AI Framework for IBM z/OS for Additional Systems” workflow.

Figure 6 — z/OS AI Framework configuration workflows

Why Start Today?

AI-powered WLM will help you manage your batch workloads and meet your SLAs. According to clients, it’s a safe place to learn and evaluate AI infused into z/OS, and these AI capabilities help demonstrate simplification, modernization, and innovation of IBM z/OS. Explore these capabilities to prepare for future use cases.


Khadija Souissi is principal product manager responsible for AI for IBM Z. A technical specialist with a master’s degree in electrical engineering, she has extensive experience working with clients as a subject matter expert in data and AI on IBM Z, designing and delivering AI and analytics solutions for IBM Z clients. In her current role, she is responsible for delivering new AI capabilities and acts as the interface between development and clients, for example by validating AI capabilities with clients so that relevant offerings reach the market.

Iris Rivera is design lead and senior design researcher responsible for AI for IBM Z. Iris earned a bachelor’s degree in information technology with a concentration in human-computer interaction from Rensselaer Polytechnic Institute. Ms. Rivera has specialized in user-centered design for the last 20 years at IBM and is committed to understanding and advocating for her users to design solutions to address their needs. She is a co-founder of zNextGen at SHARE, a user-driven networking community for new and emerging mainframe professionals.