People outside the field often take the mainframe’s longevity to mean that the technology is outdated and in need of modernization. In fact, the mainframe is a modern platform that has evolved continuously, and the DevOps tools and plug-ins available for it keep growing. Mainframe developers can now work together using established engineering practices and popular integrated development environments (IDEs) for their z/OS code development. “DevOps has a culture of automation and collaboration, as well as cool tools,” says SHARE Fort Worth Best Session winner Yuliya Varonina, DevOps and test automation engineer at IBA Group, who presented the session “DevOps for DevOps” with Dzmitry Rabau, DevOps engineer at IBA Group.

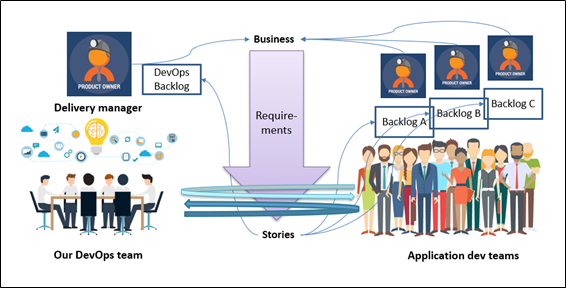

“Let's imagine that you need to meet your customer’s requirements quickly, piece-by-piece, and with the highest quality. What are you going to do?” Varonina asks. “You will collaborate with your team to find the best tools and apply a new methodology, and you will try to automate all repetitive manual tasks to achieve your client’s goals.” Many mainframe users are moving away from traditional mainframe paradigms, such as “waterfall” development, toward DevOps to create a more agile organization.

Where to Start with DevOps

Version control systems (VCS) are the foundation of a DevOps infrastructure and the natural starting point for a developer. The DevOps process usually begins as soon as changes in the VCS are detected. “As DevOps engineers, we must provide and configure the pipeline within the existing version control system. If there is no version control system, we can help developers configure it and migrate the code there,” explains Varonina. “DevOps engineers suggest the structure of branches and configure all necessary code checks. Developers must deal with their daily tasks.” She adds, “We need to understand which artifact we are working with and to which version we need to roll back in case of failure.”
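Varonina’s point about knowing which artifact is deployed and which version to roll back to can be illustrated with a minimal Python sketch. It is not IBA Group’s tooling: the `DeploymentHistory` class, the artifact name, and the version labels are all hypothetical, chosen only to show the bookkeeping involved.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DeploymentHistory:
    """Hypothetical record of the versions deployed for one artifact, oldest first."""

    artifact: str
    versions: List[str] = field(default_factory=list)

    def record(self, version: str) -> None:
        """Remember that `version` was deployed successfully."""
        self.versions.append(version)

    def current(self) -> Optional[str]:
        """Return the most recently deployed version, if any."""
        return self.versions[-1] if self.versions else None

    def rollback_target(self) -> Optional[str]:
        """Return the version deployed just before the current one, if any."""
        return self.versions[-2] if len(self.versions) >= 2 else None


# Illustrative data only: the artifact name and version labels are made up.
history = DeploymentHistory("PAYROLL.LOADLIB")
for version in ("v1.0", "v1.1", "v1.2"):
    history.record(version)

print(history.current(), history.rollback_target())
```

In practice this record usually lives in the VCS or in the deployment tool itself, so a pipeline would query it rather than keep its own list.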

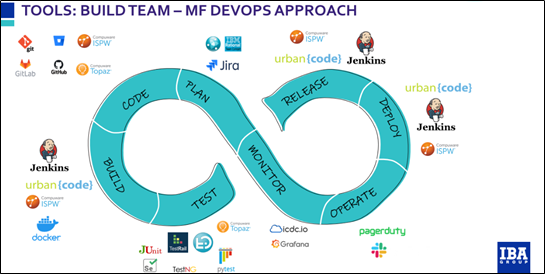

On a daily basis, DevOps team members use system connectors such as z/OSMF or an FTP interface, tool APIs, test management tools, code scanning tools (such as SonarQube, Polaris, and Black Duck), infrastructure scripts written in Bash or other shells, CI/CD pipeline tools (such as IBM UrbanCode Deploy and Jenkins), and many others.
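As one illustration of a system connector, the sketch below prepares a job submission for the z/OSMF REST jobs interface using only the Python standard library. It builds the HTTP request without sending it. The host name, credentials, and sample JCL are placeholders, the helper name `build_submit_job_request` is hypothetical, and the use of basic authentication is an assumption, since a given site may require a different scheme.

```python
import base64
import urllib.request

# Placeholder JCL: the job card values are site-specific assumptions.
SAMPLE_JCL = (
    "//HELLO    JOB (ACCT),'DEVOPS',CLASS=A,MSGCLASS=H\n"
    "//STEP1    EXEC PGM=IEFBR14\n"
)


def build_submit_job_request(
    host: str, user: str, password: str, jcl: str
) -> urllib.request.Request:
    """Build (but do not send) a z/OSMF request that submits inline JCL."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"https://{host}/zosmf/restjobs/jobs",
        data=jcl.encode("utf-8"),
        method="PUT",
        headers={
            "Content-Type": "text/plain",
            # z/OSMF expects this header on REST calls as CSRF protection.
            "X-CSRF-ZOSMF-HEADER": "true",
            # Assumption: the site accepts HTTP basic authentication.
            "Authorization": f"Basic {token}",
        },
    )


request = build_submit_job_request(
    "zosmf.example.com", "IBMUSER", "secret", SAMPLE_JCL
)
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would send it. A real pipeline would also read credentials from a secret store instead of hard-coding them.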

IBM UrbanCode Deploy (UCD) is a key CI/CD tool that developers use to create pipelines for deploying code to different environments. “The main advantage is that it is integrated with the mainframe. The z/OS plug-in can run TSO commands, store and deploy MVS datasets, submit JCL, and more,” says Varonina. “There is also support for other platforms: Linux, Win, and Cloud. This enables the use of different machines in one pipeline and significantly widens the deployment approach. One of the base features we use is Codestation.” She explains that this is a special repository, located on the UCD server, that stores all deployed artifacts and is suitable for both mainframe and distributed files. “Taking into account that our project historically doesn’t have a version control system, storing code changes in this repository helps us track changes and repeat deployment if needed,” says Varonina. Unlike in Jenkins, one of the most popular CI/CD tools, all UCD processes are configured with a drag-and-drop mechanism rather than scripting.

According to Varonina, “DevOps has to be everywhere. It’s a starting point to put all your DevOps processes and scripts to VCS and begin testing them with test automation for DevOps development.”

Elements of the Code Assembly Process

The code assembly process is a fairly complex operation designed to create a complete artifact, explains Varonina. Continuous integration is the part of the pipeline in which developers integrate new code changes into the existing code once those changes have passed quality checks. Continuous deployment is a code-promotion process during which DevOps applies code changes automatically in the required infrastructure. Both are part of the code assembly process. The goal of this process is to create a completed and packaged code change that will maintain the integrity of the changes in a working state after applying them to the environment, she adds. IBA uses Buztool, RDz (IBM Rational Developer for z Systems), and other utilities for mainframe code. Some teams, for example, also use REXX to create their own custom scripts for product-build automation. Automated testing is another prerequisite for building an end-to-end DevOps process of proper quality. Automated tests reduce delivery time and eliminate human error. Varonina says, “Enabling autotests in the pipeline at all levels provides quick feedback on the quality of changes and is a good practice.”
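The gating idea described above, in which changes are promoted only after quality checks and automated tests pass, can be sketched in a few lines of Python. This is a simplified illustration rather than how UCD or Jenkins works internally. The `run_pipeline` function and the stage names are hypothetical, and each stage is reduced to a function that returns `True` on success.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a step that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns whether every stage passed, plus the names of the stages
    that completed successfully.
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            return False, completed
        completed.append(name)
    return True, completed


deployed_to: List[str] = []


def deploy_to_test() -> bool:
    """Stand-in for a real deployment step."""
    deployed_to.append("TEST")
    return True


# Illustrative run: the automated tests fail, so nothing is packaged or deployed.
stages: List[Stage] = [
    ("code scan", lambda: True),
    ("automated tests", lambda: False),
    ("package artifact", lambda: True),
    ("deploy to TEST", deploy_to_test),
]
passed, completed = run_pipeline(stages)
print(passed, completed, deployed_to)
```

Stopping at the first failed stage is what gives developers the quick feedback Varonina describes, because a broken change never reaches packaging or deployment.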

IBA Group is working on a Global Repository for Mainframe Developers to promote the best engineering practices at its Mainframe Center of Excellence. This repository contains a list of scripts, templates, and commands that the company’s 500 mainframe developers like using, and IBA plans to publish the information in the open-source mainframe community soon. Varonina adds, “To further aid DevOps, IBA has created the IntelliJ IDEA Plugin for Mainframe to enable developers to play with mainframe code, using the IJ IDE IBA mainframe team prefers, and maybe others will like it, too.”

For many developers, the mainframe looks like an out-of-date system because infrastructure tasks can be complicated, explains Varonina; however, the mainframe continues to evolve, enabling more developers to learn and share their own expertise. She adds that, like others in the industry, she is always willing to mentor others interested in the mainframe. According to Varonina, IBA holds DevOps hackathons that help generate new ideas. “I see a bright future for DevOps and mainframes alike,” she adds. Like the mainframe, DevOps is always evolving to ensure that client needs are met effectively and in the least time-consuming way.